Uncertainty

This notebook examines uncertainty in Atomica, covering:

How uncertainty in model inputs is entered

How to perform simulations that sample from the distributions of parameters with uncertainty

How to perform analyses that incorporate uncertainty

Specifying uncertainty

Fundamentally, uncertainty enters Atomica via the data entry spreadsheets. Both the databook and program book have columns that can be used to enter uncertainty.

In the databook, uncertainty can be added to any TDVE table, that is, any of the sub-tables within the databook for each quantity. By default, these columns are not produced when the databook is written, e.g. with

proj.data.save('databook.xlsx')

If you want to include those columns, use the write_uncertainty option:

proj.data.save('databook.xlsx',write_uncertainty=True)

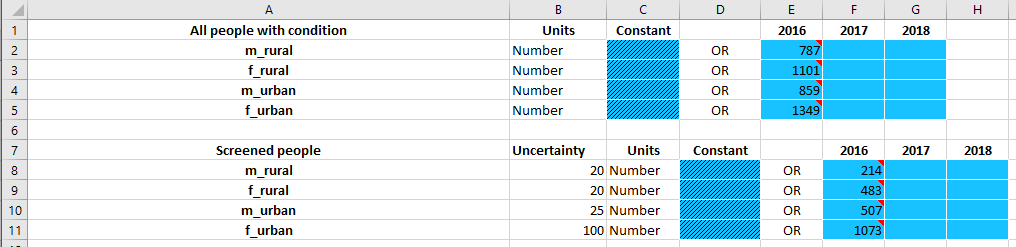

This will add an ‘uncertainty’ column to all tables in the databook. If you only want to add uncertainty to a small number of quantities, you can simply insert an extra column for the tables that you want to add uncertainty to, by selecting the other cells and moving them over. The example below shows how an uncertainty column has been added to the ‘Screened people’ table and not to the ‘All people with condition’ table:

Uncertainty should be entered as a standard deviation, with the same units as the quantity itself.

In the example above, it would be accurate to state that the 2016 value for the number of screened people in the male rural population is \(214 \pm 20\). Mathematically, the entry above corresponds to specifying that the distribution of screened people in the male rural population has a mean of 214 and a standard deviation of 20.
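To make the meaning of the uncertainty column concrete, the sketch below (plain NumPy, not Atomica's own sampling code) draws values from a normal distribution with the mean and standard deviation from the example above, and checks that the empirical statistics recover them. The seed and sample count are arbitrary illustrative choices.

```python
import numpy as np

mean = 214.0   # databook value: screened people, male rural, 2016
sigma = 20.0   # uncertainty column: a standard deviation, same units as the value

rng = np.random.default_rng(seed=0)
samples = rng.normal(loc=mean, scale=sigma, size=100_000)

# With many draws, the empirical mean and standard deviation approach the inputs
print(f"sample mean = {samples.mean():.1f}, sample std = {samples.std():.1f}")

# Roughly 68% of draws should fall within one standard deviation of the mean
within_one_sigma = np.mean(np.abs(samples - mean) <= sigma)
print(f"fraction within 214 +/- 20: {within_one_sigma:.3f}")
```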

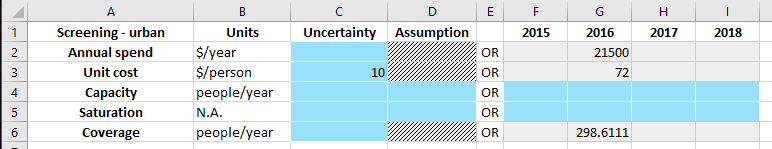

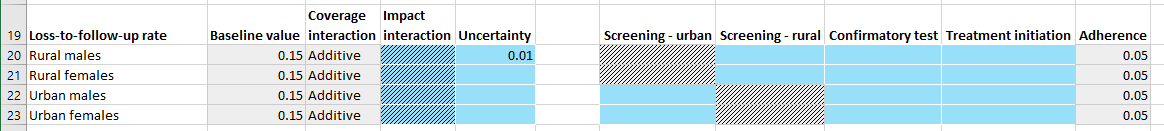

For the program book, uncertainty appears in two places - in the spending/coverage sheet, and in the outcome sheet, as shown below. These columns are always present in the program book.

To limit complexity in data entry, only a single uncertainty value can be entered for each quantity. That is, in the databook and spending/coverage sheet, uncertainty cannot vary over time, and in the outcome sheet, the uncertainty is specified at the parameter-population level, and applies to all programs reaching that parameter.
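Because only one uncertainty value is entered per quantity, one simple way to apply it to a quantity with several years of data is to draw a single offset and shift every year by the same amount. The sketch below illustrates that idea; `sample_time_series` is a hypothetical helper, the data values are illustrative, and this is an assumption about the sampling scheme rather than a description of Atomica's internals.

```python
import numpy as np

def sample_time_series(vals, sigma, rng):
    """Hypothetical helper: perturb every year by one shared normal offset."""
    vals = np.asarray(vals, dtype=float)
    if not sigma:
        return vals.copy()  # no uncertainty entered: values are unchanged
    offset = rng.normal(loc=0.0, scale=sigma)  # one draw, reused for every year
    return vals + offset

rng = np.random.default_rng(seed=1)
years = [2014, 2015, 2016]
vals = [200.0, 207.0, 214.0]  # illustrative values only
sampled = sample_time_series(vals, sigma=20.0, rng=rng)

for year, original, new in zip(years, vals, sampled):
    print(f"{year}: {original:.1f} -> {new:.1f}")

# Year-to-year changes are preserved because every year shifts together
print(np.diff(vals), np.diff(sampled))
```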

Sampling inputs

We have seen above how to enter uncertainty in the databook and program book. These uncertainties are loaded into the corresponding ProjectData and ProgramSet objects in Atomica.

[1]:

%load_ext autoreload

%autoreload 2

%matplotlib inline

import sys

sys.path.append('..')

[2]:

import atomica as at

import numpy as np

import matplotlib.pyplot as plt

from scipy import stats

import sciris as sc

np.random.seed(3)

testdir = '../../tests/'

P = at.Project(framework=testdir + 'test_uncertainty_framework.xlsx', databook=testdir + 'test_uncertainty_databook.xlsx')

P.load_progbook(testdir + 'test_uncertainty_high_progbook.xlsx')

low_uncertainty_progset = at.ProgramSet.from_spreadsheet(testdir + 'test_uncertainty_low_progbook.xlsx',project=P)

high_uncertainty_progset = at.ProgramSet.from_spreadsheet(testdir + 'test_uncertainty_high_progbook.xlsx',project=P)

default_budget = at.ProgramInstructions(start_year=2018, alloc=P.progsets[0])

doubled_budget = default_budget.scale_alloc(2)

WARNING {framework.py:1008} - Parameter "screen" is in rate units and a maximum value of "1" has been entered. Rates in the framework should generally not be limited to "1"

WARNING {framework.py:1008} - Parameter "diag" is in rate units and a maximum value of "1" has been entered. Rates in the framework should generally not be limited to "1"

WARNING {framework.py:1008} - Parameter "initiate" is in rate units and a maximum value of "1" has been entered. Rates in the framework should generally not be limited to "1"

WARNING {framework.py:1008} - Parameter "loss" is in rate units and a maximum value of "1" has been entered. Rates in the framework should generally not be limited to "1"

WARNING {framework.py:1008} - Parameter "fail_rate" is in rate units and a maximum value of "1" has been entered. Rates in the framework should generally not be limited to "1"

Elapsed time for running "default": 0.0221s

Above, we have loaded in a databook with uncertainty values, and also loaded in two different program books, one with low uncertainty, and one with high uncertainty. We can inspect the objects to see how the uncertainty entered in the spreadsheets is reflected in code.

[3]:

P.data.tdve['all_screened'].ts[0]

[3]:

<atomica.utils.TimeSeries at 0x7faea4447f60>

[<class 'atomica.utils.TimeSeries'>, <class 'object'>]

————————————————————————————————————————————————————————————

Methods:

copy() interpolate() remove_between()

get() remove() sample()

get_arrays() remove_after() insert()

remove_before()

————————————————————————————————————————————————————————————

Properties:

has_data has_time_data

————————————————————————————————————————————————————————————

_sampled: False

assumption: None

sigma: 20.0

t: [2016]

units: 'Number'

vals: [214.0]

————————————————————————————————————————————————————————————

The uncertainty value is attached to the underlying TimeSeries objects, and stored in their sigma attribute. When running a simulation, we specify a ParameterSet and optionally a ProgramSet:

[4]:

parset = P.parsets[0]

res = P.run_sim(parset)

d = at.PlotData(res,'all_screened',pops='m_rural')

at.plot_series(d);

Elapsed time for running "default": 0.0215s

If there is uncertainty, we can obtain a sampled epidemiological prediction by sampling from all of the quantities containing uncertainty. For example, the value of 214 above would be replaced by a sample from the distribution \(\mathcal{N}(214, 20^2)\), i.e. mean 214 and standard deviation 20, along with all of the other quantities. The input to the model is effectively a parameter set derived from the original parameters, but with values replaced by sampled values where appropriate. You can obtain a sampled parameter set simply by calling the ParameterSet.sample() method:

[5]:

sampled_parset = parset.sample()

Now compare the values for the screening parameter in the original parset and the sampled parset:

[6]:

print('Original parameters: %.2f' % (parset.pars['all_screened'].ts['m_rural'].vals[0]))

print('Sampled parameters: %.2f' % (sampled_parset.pars['all_screened'].ts['m_rural'].vals[0]))

Original parameters: 214.00

Sampled parameters: 249.77

The original parameters contain the values entered in the databook, while the sampled parameters have the values perturbed by the sampling. Although the sampled parameters retain the original uncertainty values, you can only perform sampling once:

[7]:

try:
    sampled_parset.sample()
except Exception as e:
    print(e)

Sampling has already been performed - can only sample once
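The "sample once" rule can be enforced with a flag on the object, much like the `_sampled` attribute visible in the `TimeSeries` output earlier. The class below is a hypothetical, stripped-down illustration of that pattern, not Atomica's implementation: `sample()` returns a perturbed copy marked as sampled, the copy keeps its `sigma`, and sampling the copy again raises an error.

```python
import copy
import numpy as np

class SimpleSeries:
    """Hypothetical stand-in for a time series that carries an uncertainty (sigma)."""

    def __init__(self, t, vals, sigma=None):
        self.t = list(t)
        self.vals = list(vals)
        self.sigma = sigma
        self._sampled = False  # becomes True on the copy returned by sample()

    def sample(self, rng):
        if self._sampled:
            raise RuntimeError("Sampling has already been performed - can only sample once")
        new = copy.deepcopy(self)  # the original keeps its databook values
        if new.sigma:
            offset = rng.normal(0.0, new.sigma)
            new.vals = [v + offset for v in new.vals]
        new._sampled = True  # sigma is kept, but further sampling is blocked
        return new

rng = np.random.default_rng(seed=3)
original = SimpleSeries(t=[2016], vals=[214.0], sigma=20.0)
sampled = original.sample(rng)
print(original.vals, sampled.vals)

try:
    sampled.sample(rng)
except RuntimeError as e:
    print(e)
```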

To run a sampled simulation, it is thus only necessary to pass the sampled ParameterSet into Project.run_sim instead of the original parameters:

[8]:

sampled_res = P.run_sim(sampled_parset,result_name='Sampled')

d = at.PlotData([res,sampled_res],'all_screened',pops='m_rural')

at.plot_series(d,axis='results');

Elapsed time for running "default": 0.0210s

Exactly the same procedure is used for programs - the ProgramSet has a sample() method which returns a sampled ProgramSet that can be used instead of the original ProgramSet to perform a sampled simulation:

[9]:

sampled_progset = high_uncertainty_progset.sample()

res = P.run_sim(parset,high_uncertainty_progset,progset_instructions=default_budget,result_name='Original')

sampled_res = P.run_sim(parset,sampled_progset,progset_instructions=default_budget,result_name='Sampled')

d = at.PlotData([res,sampled_res],'all_screened',pops='m_rural')

at.plot_series(d,axis='results');

Elapsed time for running "default": 0.0277s

Elapsed time for running "default": 0.0286s

Of course, we can combine the sampled parset and the sampled progset in a single simulation to sample from both sources of uncertainty simultaneously:

[10]:

sampled_res = P.run_sim(sampled_parset,sampled_progset,progset_instructions=default_budget,result_name='Sampled')

d = at.PlotData([res,sampled_res],'all_screened',pops='m_rural')

at.plot_series(d,axis='results');

Elapsed time for running "default": 0.0282s

One issue when sampling is that of satisfying initial conditions. Databooks often require some work to ensure that none of the initial compartment sizes are negative. If uncertainties are specified for characteristics that are used for initialization, the independently sampled characteristic values may yield a bad initialization (where some of the compartment sizes are negative, or the characteristic values are otherwise inconsistent). If this happens, a BadInitialization error is raised. Therefore, to write reliable sampling code, it is essential to catch this exception:

[11]:

np.random.seed(1) # This seed provides a BadInitialization at the next iteration

sampled_parset = parset.sample()

try:
    sampled_res = P.run_sim(sampled_parset)
except at.BadInitialization as e:
    print(e)

Negative initial popsizes:

Compartment f_rural scr - Calculated -11.235128

Characteristic 'all_people': Target value = [1101.]

Compartment undx: Computed value = 630.235128

Compartment scr: Computed value = -11.235128

Compartment dx: Computed value = 100.000000

Compartment tx: Computed value = 234.000000

Compartment con: Computed value = 148.000000

Characteristic 'all_screened': Target value = [470.76487173]

Compartment scr: Computed value = -11.235128

Compartment dx: Computed value = 100.000000

Compartment tx: Computed value = 234.000000

Compartment con: Computed value = 148.000000
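The numbers in this message can be reproduced by hand. As listed in the message, the characteristic 'all_screened' covers the scr, dx, tx and con compartments, so once dx, tx and con are fixed, scr is whatever remains of the sampled target; 'all_people' additionally includes undx. The sketch below simply redoes that arithmetic with the printed values, assuming (as the message suggests) that each characteristic is a plain sum of its compartments.

```python
# Values copied from the BadInitialization message above
all_people_target = 1101.0
all_screened_target = 470.76487173  # sampled value of the 'all_screened' characteristic
dx, tx, con = 100.0, 234.0, 148.0

# all_screened = scr + dx + tx + con, so scr is the remainder
scr = all_screened_target - (dx + tx + con)

# all_people = undx + scr + dx + tx + con, so undx is the remainder
undx = all_people_target - (scr + dx + tx + con)

print(f"scr = {scr:.6f}")    # -11.235128: the sampled target is below dx + tx + con
print(f"undx = {undx:.6f}")  # 630.235128
```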

When this error occurs, the correct thing to do is usually just to sample again, on the assumption that other samples may satisfy the initial conditions. Sampling would thus be carried out within a while loop that continues until a simulation runs successfully. To automate this, we can use Project.run_sampled_sims(), which functions similarly to Project.run_sim() except that it incorporates a sampling step together with automatic resampling if the initial conditions are unsatisfactory:
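The resample-until-success loop can be sketched generically. In the code below, `BadInitialization`, `draw_sample` and `run_model` are hypothetical stand-ins rather than Atomica objects, and the toy model and its numbers are illustrative. Instead of an unbounded while loop, the sketch uses a `max_attempts` cap as an added safeguard, so that a databook whose uncertainties almost never yield a valid initialization fails loudly instead of looping forever.

```python
import numpy as np

class BadInitialization(Exception):
    """Hypothetical stand-in for an invalid-initial-conditions error."""

def run_until_valid(draw_sample, run_model, max_attempts=100):
    """Keep drawing samples until one runs without a bad initialization."""
    for _ in range(max_attempts):
        sample = draw_sample()
        try:
            return run_model(sample)
        except BadInitialization:
            continue  # discard this sample and draw a fresh one
    raise RuntimeError(f"No valid sample found in {max_attempts} attempts")

def run_sampled(draw_sample, run_model, n_samples=1, max_attempts=100):
    """Collect n_samples successful runs into a list."""
    return [run_until_valid(draw_sample, run_model, max_attempts) for _ in range(n_samples)]

# Toy model echoing the error above: scr = sampled all_screened - (dx + tx + con)
rng = np.random.default_rng(seed=1)

def draw_sample():
    return rng.normal(490.0, 20.0)  # illustrative draw of the 'all_screened' total

def run_model(all_screened):
    scr = all_screened - (100.0 + 234.0 + 148.0)
    if scr < 0:
        raise BadInitialization(f"Negative initial popsize: scr = {scr:.2f}")
    return scr

results = run_sampled(draw_sample, run_model, n_samples=5)
print(len(results), all(r >= 0 for r in results))
```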

[12]:

sampled_res = P.run_sampled_sims(parset)

print(sampled_res)

Elapsed time for running "default": 0.0198s

[[<atomica.results.Result at 0x7faee1a4c0d0>

[<class 'atomica.results.Result'>, <class 'atomica.utils.NamedItem'>, <class 'object'>]

————————————————————————————————————————————————————————————

Methods:

charac_names() export_raw() get_variable()

check_for_nans() get_alloc() link_names()

comp_names() get_coverage() par_names()

copy() get_equivalent_... plot()

————————————————————————————————————————————————————————————

Properties:

dt pop_labels used_programs

framework t

————————————————————————————————————————————————————————————

created: datetime.datetime(2024, 2, 5, 19, 36, 47, 488190,

tzinfo=tzutc())

gitinfo: {'branch': 'Detached head (no branch)', 'hash':

'17efbee', 'date': '2 [...]

model: <atomica.model.Model object at 0x7faea17b52d0>

modified: datetime.datetime(2024, 2, 5, 19, 36, 47, 488217,

tzinfo=tzutc())

name: 'default'

parset_name: 'default'

pop_names: ['m_rural', 'f_rural', 'm_urban', 'f_urban']

uid: UUID('70e871c5-8f4f-4ba4-ab1f-edbbfdc9d6cc')

version: '1.28.1'

————————————————————————————————————————————————————————————

]]

Note that the return value is a list of results, rather than the single Result returned by Project.run_sim(). Typically, working with uncertainty requires multiple samples, so you can also pass in a number of samples, and Project.run_sampled_sims() will return the requested number of sampled results:

[13]:

sampled_res = P.run_sampled_sims(parset,n_samples=5)

print(len(sampled_res))


100%|██████████| 5/5 [00:00<00:00, 36.36it/s]

5

When running multiple sampled simulations, a progress bar with estimated time remaining will be displayed.

Analysis

Now that we are able to run simulations with sampled parameters, the next question is how to produce analysis outputs, such as plots with error bars, that visually represent uncertainty. The first step is to obtain a collection of results. The number of samples required depends loosely on the number of quantities with uncertainty, but as a general rule of thumb, on the order of 50-200 samples is usually needed.
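One way to see why tens to a few hundred samples is usually enough: the Monte Carlo error in an estimated mean shrinks like \(\sigma/\sqrt{n}\), so quadrupling the number of samples only halves the error. The sketch below (plain NumPy, with an illustrative standard deviation of 20) prints that standard error for a few sample sizes and checks it empirically at \(n = 100\). Quantiles such as the quartiles converge at a similar \(1/\sqrt{n}\) rate, although with a somewhat larger constant.

```python
import numpy as np

sigma = 20.0  # illustrative spread of one output across samples

for n in (10, 50, 100, 200, 1000):
    # Standard error of the sample mean for n independent samples
    print(f"n = {n:4d}: standard error of the mean ~ {sigma / np.sqrt(n):.2f}")

# Empirical check at n = 100: repeat the 100-sample experiment many times
rng = np.random.default_rng(seed=0)
means = rng.normal(214.0, sigma, size=(5000, 100)).mean(axis=1)
print(f"empirical spread of the mean at n = 100: {means.std():.2f}")  # close to 2.00
```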

[14]:

sampled_res = P.run_sampled_sims(parset,n_samples=100)


100%|██████████| 100/100 [00:03<00:00, 27.80it/s]

For comparison, we will also run a ‘baseline’ simulation with no sampling. A plot of the baseline results looks like this:

[15]:

baseline = P.run_sim(parset)

d = at.PlotData(baseline,outputs=['screen','diag','initiate'],pops='m_rural')

at.plot_series(d);

Elapsed time for running "default": 0.0207s

Basic plotting

The normal sequence for plotting is to:

1. Instantiate a PlotData object with the quantities to plot. Effectively, this operation maps a Result to a PlotData object.
2. Call a plotting library function to generate the plot.

Plots generated with the plotting library compare multiple results (e.g. different scenarios) rather than showing uncertainty computed over an ensemble of results. Further, they only take in a single PlotData instance where the results to compare have been included in the same PlotData object.

To work with uncertainty, instead of plotting a single PlotData object, we plot a collection of PlotData objects. These plots are generated using a different set of plotting functions that take in multiple PlotData instances and use them to compute and display uncertainties (e.g. as error bars or as shaded areas).

This functionality is accessed via the Ensemble class. The Ensemble class is designed to store and plot ensembles of results. Internally, it stores a list of PlotData objects - one for each sample. It also contains a ‘mapping function’ that takes in results and returns a PlotData instance. To use an Ensemble, first we need to define a mapping function. In the simplest case, this looks just like a normal call to PlotData():

[16]:

mapping_function = lambda x: at.PlotData(x,outputs=['screen','diag','initiate'],pops='m_rural')

Then, we create an Ensemble:

[17]:

ensemble = at.Ensemble(mapping_function=mapping_function)

We then need to load all of the results into the Ensemble, which applies the mapping function to each of them. Similar to Python’s built-in set class, there are two ways of inserting the results:

1. One by one, using Ensemble.add(result)
2. All at once, using Ensemble.update(list_of_results)

In our case, we already have a list of results generated by P.run_sampled_sims, so we can use Ensemble.update() to insert them:
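To illustrate the idea of an ensemble that applies a mapping function when results are inserted, here is a hypothetical, much-simplified class. The "results" are stand-in dictionaries rather than Atomica `Result` objects, and the mapping function extracts a NumPy array instead of building a `PlotData` object; only the `add`/`update` pattern mirrors the description above.

```python
import numpy as np

class MiniEnsemble:
    """Hypothetical simplified ensemble: stores one mapped output per sample."""

    def __init__(self, mapping_function, name=None):
        self.mapping_function = mapping_function
        self.name = name
        self.samples = []

    def add(self, result):
        # Map a single result immediately and keep only the mapped output
        self.samples.append(self.mapping_function(result))

    def update(self, results):
        # Insert an iterable of results, one at a time
        for result in results:
            self.add(result)

# Stand-in 'results': dictionaries holding one time series per output
results = [{"screen": np.array([0.10, 0.12, 0.15]) + 0.01 * i} for i in range(4)]

ensemble = MiniEnsemble(mapping_function=lambda r: r["screen"])
ensemble.add(results[0])      # one by one
ensemble.update(results[1:])  # all at once
print(len(ensemble.samples))
```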

[18]:

ensemble.update(sampled_res)

Finally, we can make some plots. To start with, let’s make a plot of the time series like the one shown above for the baseline results, but with uncertainty this time:

[19]:

ensemble.plot_series();

As we have only loaded the sampled results, the dashed line corresponds to the median of the samples, and the shaded areas span the first to third quartiles of the samples. Rather than the median of the samples, it is generally more appropriate to show the actual baseline results. To do this, we can load the baseline results into the Ensemble and then regenerate the plot.
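The central line and band described here can be computed directly from the samples: at each time point, take the median and the first and third quartiles across the ensemble. The sketch below does this with `np.quantile` on a synthetic array of sampled trajectories (shape samples by time). The synthetic data is illustrative, and Atomica's plotting code may differ in detail, for example in how quantiles are interpolated.

```python
import numpy as np

rng = np.random.default_rng(seed=2)
t = np.arange(2016, 2024)
# Synthetic ensemble: 100 sampled trajectories, each a noisy upward trend
samples = 200.0 + 5.0 * (t - t[0]) + rng.normal(0.0, 20.0, size=(100, t.size))

median = np.median(samples, axis=0)                  # central line without a baseline
q1, q3 = np.quantile(samples, [0.25, 0.75], axis=0)  # edges of the shaded band

for year, lo, mid, hi in zip(t, q1, median, q3):
    print(f"{year}: Q1 = {lo:6.1f}, median = {mid:6.1f}, Q3 = {hi:6.1f}")
```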

The baseline results need to have the same names as the sampled results. If you do not explicitly specify names, Project.run_sampled_sims produces default names that avoid collisions between multiple instructions. However, Project.run_sim produces default names that avoid collisions with other results stored in the project. Therefore, it may be necessary to explicitly set or change the result names if the names were set differently.

In this case, the baseline results need to have their name changed to 'default' to match the sampled results:

[20]:

baseline.name = 'default'

ensemble.set_baseline(baseline)

ensemble.plot_series();

As with the rest of the plotting library, the legend is ‘maximally informative’ in that it contains as much information as possible. This is because the most appropriate labelling for the figure (axis labels, titles, or legend entries) varies greatly with context, and it is often necessary to relabel the figure on a plot-by-plot basis. This relabelling is facilitated by having all of the necessary content on the figure, rather than having to keep track of it separately. Thus, the labels may need to be edited afterwards depending on usage.

The '<unnamed>' string corresponds to the name of the ensemble. We hadn’t given the ensemble a name, but naming it can be useful if you want to superimpose multiple ensembles on the same plot. The ensemble can be named at construction, or at any point afterwards:

[21]:

ensemble.name = 'Example'

ensemble.plot_series();

Now, the baseline results are displayed as solid lines. Other types of plots can be generated too.

[22]:

ensemble.plot_bars(years=2018)

[22]:

Because the Ensemble stores PlotData objects, you can perform any aggregation operations in the same way as normal. For example, we can plot the years lost to disability by integrating the number of people in the disease compartments.

[23]:

yld = lambda x: at.PlotData(x,outputs={'disease':['undx','scr','dx','tx']},t_bins=[2018,2023],time_aggregation='integrate')
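Integrating a population count over time gives person-years. A minimal sketch of that kind of aggregation, using the trapezoidal rule on an illustrative time grid, is shown below. `integrate_over_bin` is a hypothetical helper, and Atomica's own `time_aggregation='integrate'` may use a different quadrature (for example, summing values multiplied by the time step).

```python
import numpy as np

def integrate_over_bin(t, vals, start, stop):
    """Hypothetical helper: trapezoidal integral of vals(t) for start <= t <= stop."""
    t = np.asarray(t, dtype=float)
    vals = np.asarray(vals, dtype=float)
    mask = (t >= start) & (t <= stop)
    tb, vb = t[mask], vals[mask]
    # Sum of trapezoid areas between consecutive time points
    return float(np.sum(0.5 * (vb[1:] + vb[:-1]) * np.diff(tb)))

dt = 0.25
t = np.arange(2016, 2025 + dt / 2, dt)         # simulation-style grid with step dt
people_with_disease = np.full(t.size, 100.0)   # illustrative constant count

# 100 people over the 5 years from 2018 to 2023 gives 500 person-years
print(integrate_over_bin(t, people_with_disease, 2018, 2023))
```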

This is the usual way that this plot would be generated for a single Result without uncertainty, and indeed we can generate that plot now:

[24]:

d = yld(baseline)

at.plot_bars(d);

With uncertainty, we simply make the plot via an Ensemble instead:

[25]:

ensemble = at.Ensemble(name='Example',mapping_function=yld,baseline_results=baseline)

ensemble.update(sampled_res)

ensemble.plot_bars()

[25]:

You can also use the Ensemble.summary_statistics() method to get a dataframe with summary statistics:

[26]:

ensemble.summary_statistics()

[26]:

| year | result | output | pop | quantity | value |
|---|---|---|---|---|---|
| NaN | default | disease | m_rural | baseline | 3362.999052 |
| | | | | mean | 3362.689865 |
| | | | | median | 3362.229674 |
| | | | | max | 3383.501089 |
| | | | | min | 3348.031225 |
| | | | | Q1 | 3357.640676 |
| | | | | Q3 | 3367.016381 |
| | | | f_rural | baseline | 4175.364625 |
| | | | | mean | 4174.657501 |
| | | | | median | 4173.735033 |
| | | | | max | 4217.624374 |
| | | | | min | 4132.233303 |
| | | | | Q1 | 4166.075537 |
| | | | | Q3 | 4185.514899 |
| | | | m_urban | baseline | 3396.464712 |
| | | | | mean | 3397.135096 |
| | | | | median | 3397.719692 |
| | | | | max | 3419.841094 |
| | | | | min | 3371.550566 |
| | | | | Q1 | 3391.851250 |
| | | | | Q3 | 3404.597237 |
| | | | f_urban | baseline | 4968.772456 |
| | | | | mean | 4968.806673 |
| | | | | median | 4966.767523 |
| | | | | max | 5016.001787 |
| | | | | min | 4911.368454 |
| | | | | Q1 | 4954.877096 |
| | | | | Q3 | 4983.216754 |
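Each block of rows in this table is a set of ordinary descriptive statistics over the sampled values, reported alongside the baseline. A sketch of computing the same quantities for one output and population with NumPy is below; `summarize` is a hypothetical helper, and the synthetic values are illustrative and will not match the table.

```python
import numpy as np

def summarize(baseline, sampled_values):
    """Hypothetical helper: baseline plus descriptive statistics across samples."""
    v = np.asarray(sampled_values, dtype=float)
    return {
        "baseline": float(baseline),
        "mean": float(v.mean()),
        "median": float(np.median(v)),
        "max": float(v.max()),
        "min": float(v.min()),
        "Q1": float(np.quantile(v, 0.25)),
        "Q3": float(np.quantile(v, 0.75)),
    }

rng = np.random.default_rng(seed=4)
baseline = 3363.0                                 # illustrative baseline value
sampled_values = rng.normal(baseline, 7.0, size=100)  # illustrative sampled values

for quantity, value in summarize(baseline, sampled_values).items():
    print(f"{quantity:>8}: {value:.2f}")
```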

Comparing sampled results

Moving up in complexity, a common analysis task is comparing results across scenarios. At the beginning, we instantiated two sets of instructions, one with default spending and one with doubled spending. We will now produce samples from both of these instructions. There are two ways to approach this problem:

Storing the results in separate Ensembles

Putting multiple results into a single Ensemble

For the most valid comparison, each set of instructions should be run with the same parset and progset samples. That is, to generate a single result for each set of instructions, it is necessary to first sample, and then use the same samples for all instructions. Written out explicitly, this would be:

[27]:

np.random.seed(3)

sampled_parset = parset.sample()

sampled_progset = high_uncertainty_progset.sample()

result_default_spend = P.run_sim(sampled_parset,sampled_progset,default_budget,result_name='Baseline')

result_doubled_spend = P.run_sim(sampled_parset,sampled_progset,doubled_budget,result_name='Doubled budget')

Elapsed time for running "default": 0.0282s

Elapsed time for running "default": 0.0277s
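Reusing the same sampled inputs for every set of instructions means the budget comparison is made sample by sample, so uncertainty that affects both scenarios in the same way largely cancels out of the difference. The toy calculation below (plain NumPy; `toy_outcome` is a made-up linear response, not an Atomica model) compares the spread of the difference when both scenarios share samples versus when each is sampled independently.

```python
import numpy as np

rng = np.random.default_rng(seed=5)
n = 1000

def toy_outcome(effect, budget_multiplier):
    """Made-up model: a larger budget reduces the outcome in proportion to an uncertain effect."""
    return 1000.0 - 50.0 * budget_multiplier * effect

# Paired: one draw of the uncertain input per sample, reused for both budgets
shared = rng.normal(1.0, 0.3, size=n)
paired_diff = toy_outcome(shared, 2.0) - toy_outcome(shared, 1.0)

# Unpaired: each budget scenario gets its own independent draws
effect_doubled = rng.normal(1.0, 0.3, size=n)
effect_default = rng.normal(1.0, 0.3, size=n)
independent_diff = toy_outcome(effect_doubled, 2.0) - toy_outcome(effect_default, 1.0)

print(f"std of difference, same samples:        {paired_diff.std():.1f}")
print(f"std of difference, independent samples: {independent_diff.std():.1f}")
```

Under this toy model, the paired difference has a standard deviation of about 15, while the independent comparison is roughly twice as noisy (about 34), even though both estimate the same average effect.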

Comparing results across budget scenarios is extremely common, so Atomica has built-in functionality to facilitate this. You can pass multiple instructions and result names to Project.run_sampled_sims() to generate results as shown above:

[28]:

sampled_res = P.run_sampled_sims(parset,high_uncertainty_progset,n_samples=100,progset_instructions=[default_budget,doubled_budget],result_names=['Baseline','Doubled budget'])

print(len(sampled_res))


end{sphinxVerbatim}

73%|███████▎ | 73/100 [00:05<00:01, 14.17it/s]

- more-to-come:

- class:

stderr

- 75%|███████▌ | 75/100 [00:06<00:02, 9.66it/s]

</pre>

- 75%|███████▌ | 75/100 [00:06<00:02, 9.66it/s]

end{sphinxVerbatim}

75%|███████▌ | 75/100 [00:06<00:02, 9.66it/s]

- more-to-come:

- class:

stderr

- 77%|███████▋ | 77/100 [00:06<00:02, 10.59it/s]

</pre>

- 77%|███████▋ | 77/100 [00:06<00:02, 10.59it/s]

end{sphinxVerbatim}

77%|███████▋ | 77/100 [00:06<00:02, 10.59it/s]

- more-to-come:

- class:

stderr

- 79%|███████▉ | 79/100 [00:06<00:01, 11.66it/s]

</pre>

- 79%|███████▉ | 79/100 [00:06<00:01, 11.66it/s]

end{sphinxVerbatim}

79%|███████▉ | 79/100 [00:06<00:01, 11.66it/s]

- more-to-come:

- class:

stderr

- 81%|████████ | 81/100 [00:06<00:01, 11.29it/s]

</pre>

- 81%|████████ | 81/100 [00:06<00:01, 11.29it/s]

end{sphinxVerbatim}

81%|████████ | 81/100 [00:06<00:01, 11.29it/s]

- more-to-come:

- class:

stderr

- 83%|████████▎ | 83/100 [00:06<00:01, 11.28it/s]

</pre>

- 83%|████████▎ | 83/100 [00:06<00:01, 11.28it/s]

end{sphinxVerbatim}

83%|████████▎ | 83/100 [00:06<00:01, 11.28it/s]

- more-to-come:

- class:

stderr

- 85%|████████▌ | 85/100 [00:06<00:01, 11.36it/s]

</pre>

- 85%|████████▌ | 85/100 [00:06<00:01, 11.36it/s]

end{sphinxVerbatim}

85%|████████▌ | 85/100 [00:06<00:01, 11.36it/s]

- more-to-come:

- class:

stderr

- 87%|████████▋ | 87/100 [00:06<00:01, 12.71it/s]

</pre>

- 87%|████████▋ | 87/100 [00:06<00:01, 12.71it/s]

end{sphinxVerbatim}

87%|████████▋ | 87/100 [00:06<00:01, 12.71it/s]

- more-to-come:

- class:

stderr

- 89%|████████▉ | 89/100 [00:07<00:00, 11.89it/s]

</pre>

- 89%|████████▉ | 89/100 [00:07<00:00, 11.89it/s]

end{sphinxVerbatim}

89%|████████▉ | 89/100 [00:07<00:00, 11.89it/s]

- more-to-come:

- class:

stderr

- 91%|█████████ | 91/100 [00:07<00:00, 13.15it/s]

</pre>

- 91%|█████████ | 91/100 [00:07<00:00, 13.15it/s]

end{sphinxVerbatim}

91%|█████████ | 91/100 [00:07<00:00, 13.15it/s]

- more-to-come:

- class:

stderr

- 93%|█████████▎| 93/100 [00:07<00:00, 13.25it/s]

</pre>

- 93%|█████████▎| 93/100 [00:07<00:00, 13.25it/s]

end{sphinxVerbatim}

93%|█████████▎| 93/100 [00:07<00:00, 13.25it/s]

- more-to-come:

- class:

stderr

- 95%|█████████▌| 95/100 [00:07<00:00, 14.12it/s]

</pre>

- 95%|█████████▌| 95/100 [00:07<00:00, 14.12it/s]

end{sphinxVerbatim}

95%|█████████▌| 95/100 [00:07<00:00, 14.12it/s]

- more-to-come:

- class:

stderr

- 97%|█████████▋| 97/100 [00:07<00:00, 13.00it/s]

</pre>

- 97%|█████████▋| 97/100 [00:07<00:00, 13.00it/s]

end{sphinxVerbatim}

97%|█████████▋| 97/100 [00:07<00:00, 13.00it/s]

- more-to-come:

- class:

stderr

- 99%|█████████▉| 99/100 [00:07<00:00, 13.13it/s]

</pre>

- 99%|█████████▉| 99/100 [00:07<00:00, 13.13it/s]

end{sphinxVerbatim}

99%|█████████▉| 99/100 [00:07<00:00, 13.13it/s]

- 100%|██████████| 100/100 [00:07<00:00, 12.50it/s]

</pre>

- 100%|██████████| 100/100 [00:07<00:00, 12.50it/s]

end{sphinxVerbatim}

100%|██████████| 100/100 [00:07<00:00, 12.50it/s]

100

The output is now a list of lists, where each inner list contains the results generated with different instructions but the same sampled inputs. This is the input that would be passed to an Ensemble mapping function to produce a PlotData instance that mixes results. Now, we can generate a plot comparing YLD in each population.
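To make the list-of-lists layout concrete, here is a minimal, library-free sketch. `toy_model` and `run_paired_samples` are hypothetical stand-ins, not Atomica functions. The only point illustrated is that every inner list reuses one sampled input across all instructions.

```python
import numpy as np

# Toy stand-in for a model run (NOT Atomica): maps one sampled input
# (a screening rate) and a budget multiplier to a single output value.
def toy_model(screen_rate, budget_multiplier):
    return 1000.0 * screen_rate * budget_multiplier

def run_paired_samples(instructions, n_samples, seed=0):
    """Return a list of lists: outer index = sample, inner index = instruction.

    Each inner list reuses the same sampled input for every instruction.
    """
    rng = np.random.default_rng(seed)
    results = []
    for _ in range(n_samples):
        screen_rate = rng.normal(0.2, 0.02)  # one draw shared by all instructions
        results.append([toy_model(screen_rate, m) for m in instructions])
    return results

sampled = run_paired_samples(instructions=[1.0, 2.0], n_samples=100)
print(len(sampled), len(sampled[0]))  # prints "100 2"
```

Because both entries of each inner list come from the same draw, any comparison between them isolates the effect of the instructions rather than sampling noise.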

[29]:

sampled_res = P.run_sampled_sims(parset,high_uncertainty_progset,progset_instructions=[default_budget,doubled_budget],n_samples=100,result_names=['Baseline','Doubled budget'])


[30]:

mapping_function = lambda x: at.PlotData(x,outputs=['screen','diag','initiate'],pops='m_rural')

ensemble = at.Ensemble(name='Example',mapping_function=mapping_function)

ensemble.update(sampled_res)

ensemble.plot_series();

[31]:

ensemble = at.Ensemble(name='Example',mapping_function=yld)

ensemble.update(sampled_res)

ensemble.plot_bars();

To aid comparison, it may be clearer to plot individual populations at a time. For example:

[32]:

ensemble.plot_bars(pops='m_urban');

In this case, there isn’t much difference because, as shown in the time series, most of the variability occurs in the initial simulation years. We can see this by plotting a quantity with more variability, for example the number of undiagnosed people in the first simulation year:

[33]:

undx = lambda x: at.PlotData(x,outputs='undx',pops='m_urban')

ensemble = at.Ensemble(name='Example',mapping_function=undx)

ensemble.update(sampled_res)

ensemble.plot_bars(years=2017);

If you pass in a figure to Ensemble.plot_bars(), it will attempt to append new bars into the existing figure. For example:

[34]:

fig = ensemble.plot_bars(years=2017);

ensemble.plot_bars(years=2018,fig=fig);

In this case, it was not essential to do this because multiple years can be plotted in a single plot_bars call. However, you could superimpose bars from entirely separate Ensemble objects, if you wanted to compare quantities computed using different mapping functions.

Another important type of plot is the sampled distribution of a quantity. Effectively, the entire Atomica model is a function that maps probability distributions for model inputs into distributions of model outputs. These distributions can be plotted directly. For example:

[35]:

ensemble.plot_distribution(pops='m_urban');

The distributions that are plotted are effectively vertical cross-sections of the time series plots. It is important to inspect them for multi-modal or asymmetric distributions, because these affect whether reporting summary statistics (such as a mean and standard deviation) is valid.
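The idea of a "vertical cross-section" can be sketched with plain NumPy. This assumes the sampled outputs are held in a hypothetical `samples` array (rows are samples, columns are years); it is not how Ensemble stores data internally. The lognormal noise is chosen deliberately to show an asymmetric distribution.

```python
import numpy as np

# Hypothetical sampled time series: rows are samples, columns are years
rng = np.random.default_rng(1)
years = np.arange(2016, 2024)
# Right-skewed (lognormal) values to show why summary statistics can mislead
samples = 200.0 * rng.lognormal(mean=0.0, sigma=0.5, size=(500, years.size))

def cross_section(samples, years, year):
    """Return the sampled values of a single year (one column of the array)."""
    idx = int(np.flatnonzero(years == year)[0])
    return samples[:, idx]

values = cross_section(samples, years, 2017)
mean, median = values.mean(), np.median(values)
lo, hi = np.percentile(values, [2.5, 97.5])
print(f"mean={mean:.1f} median={median:.1f} 95% interval=({lo:.1f}, {hi:.1f})")
```

For a right-skewed distribution like this one, the mean sits above the median and the 95% interval is not symmetric about the median, so a "mean ± standard deviation" summary would misrepresent it.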

There are two ways to compare distributions:

- Within an Ensemble
  - Comparing multiple outputs/pops
  - Comparing multiple results
- Across Ensembles
  - Typically comparing corresponding outputs/pops

To cater for the former, if a PlotData instance contains multiple years, results, outputs, or pops, they will all be shown on the distribution figure unless filtered using arguments to Ensemble.plot_distribution. For the latter, subsequent calls to Ensemble.plot_distribution can pass in a Figure, which will superimpose the distributions onto the existing figure. For example:

[36]:

ensemble = at.Ensemble(lambda x: at.PlotData(x)) # All compartments

ensemble.update(sampled_res)

ensemble.plot_distribution(pops='m_rural',outputs='undx');

Distributions of differences between results¶

One of the most important analyses is quantifying the difference between one budget scenario and another, for example the improvement from optimizing the budget. In the presence of uncertainty, this difference becomes a distribution. However, samples from this distribution must be computed pairwise: for each set of sampled inputs, run both budgets, compute the difference between them, and store that difference. This can be done by defining a mapping function that returns a PlotData containing differences rather than raw values. For example:

[37]:

def get_differences(res):
    d1 = at.PlotData(res[0],outputs={'disease':['undx','scr','dx','tx']},t_bins=[2018,2023],time_aggregation='integrate')
    d2 = at.PlotData(res[1],outputs={'disease':['undx','scr','dx','tx']},t_bins=[2018,2023],time_aggregation='integrate')
    return d2-d1

Note that the PlotData class implements operators for subtraction and division, to facilitate comparing derived/aggregated quantities. We can now create an Ensemble with this mapping function, add the results, and plot the distribution of differences:
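Why the differences must be paired can be shown with a small NumPy experiment. `toy_outcome` and the shared input are hypothetical; the sketch only contrasts differencing within each sample (as `get_differences` does) against differencing unrelated samples.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 1000
# Hypothetical shared uncertain input (e.g. an initial prevalence)
shared_input = rng.normal(1000.0, 100.0, size=n)

def toy_outcome(initial, budget_effect):
    # Toy model: outcome scales with the input; the budget reduces it by a fraction
    return initial * (1.0 - budget_effect)

baseline = toy_outcome(shared_input, 0.10)
doubled = toy_outcome(shared_input, 0.20)

# Pairwise: difference computed within each sample (same sampled inputs)
paired_diff = doubled - baseline

# Unpaired (inappropriate here): differences between mismatched samples
unpaired_diff = rng.permutation(doubled) - baseline

print(f"paired SD={paired_diff.std():.1f}, unpaired SD={unpaired_diff.std():.1f}")
```

Both approaches give a similar mean difference, but the unpaired version mixes input uncertainty into the comparison and produces a far wider distribution, which could even suggest the doubled budget sometimes performs worse.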

[38]:

ensemble = at.Ensemble(get_differences,'difference')

ensemble.update(sampled_res)

ensemble.plot_distribution(pops='m_rural');

Cascade ensembles¶

Plotting uncertainty on cascades is a common task. However, the cascade plotting functions normally operate directly on Result objects, rather than on PlotData, which forms the basis of data storage in Ensembles. For normal analysis without uncertainty, this makes standard cascade plots easier to produce because no intermediate PlotData step is required. For uncertainty, the simplest option is to construct a PlotData object containing the required cascade values and to generate the plot via Ensemble.plot_bars. The key difference between Ensemble.plot_bars and plotting.plot_bars is that Ensemble.plot_bars doesn’t support stacking (which could be misleading with uncertainty) and therefore supports arbitrary ordering of the bars, whereas plotting.plot_bars requires the inner group to consist of outputs or pops only, as these are the only valid stacking targets.
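One way stacking can mislead is that uncertainty bounds are not additive: the upper bound of a total is generally not the sum of the component upper bounds. The sketch below assumes two hypothetical, independent, normally distributed outputs and uses only NumPy; it is an illustration of that general point, not Atomica code.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 10000
# Two hypothetical independent uncertain outputs (e.g. two populations)
a = rng.normal(100.0, 20.0, size=n)
b = rng.normal(100.0, 20.0, size=n)

upper_a, upper_b = np.percentile(a, 97.5), np.percentile(b, 97.5)
upper_total = np.percentile(a + b, 97.5)

# Stacking the component upper bounds overstates the upper bound of the total
print(f"stacked upper bounds: {upper_a + upper_b:.1f}")
print(f"upper bound of total: {upper_total:.1f}")
```

Means do add across components, but percentiles do not, so a stacked bar with error bars would not correctly represent the uncertainty of the total.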

To facilitate generation of the appropriate mapping function and arguments to Ensemble.plot_bars, this functionality is built into the CascadeEnsemble class, which inherits from Ensemble. The constructor for CascadeEnsemble takes in the name of the cascade to plot. It also requires a ProjectFramework to be passed in, which provides the definition of the cascade being requested:

[39]:

cascade_ensemble = at.CascadeEnsemble(P.framework, 'main')

Results can be loaded into the cascade ensemble as normal:

[40]:

cascade_ensemble.update(sampled_res)

A cascade plot can then be plotted using CascadeEnsemble.plot_multi_cascade():

[41]:

cascade_ensemble.plot_multi_cascade(years=2018)


Notice how the bars are automatically grouped by cascade stage and labelled appropriately. It’s also possible to call standard plotting functions that are inherited from the Ensemble class. For example, we could plot the time series of cascade stages using Ensemble.plot_series:

[42]:

cascade_ensemble.plot_series(results='Baseline',pops='m_rural');

Virtual stages¶

Normal cascades must be formed from compartments and characteristics only, and these stages are automatically validated to ensure proper nesting. However, because CascadeEnsemble.plot_multi_cascade is based around PlotData, it is possible to display a cascade-like plot where stages are defined using arbitrary PlotData operations. That is, the CascadeEnsemble does not require that the cascade is actually valid. This opens up the possibility of generating cascade-like plots with ‘virtual stages’ that don’t otherwise exist. An example from an actual application is the introduction of a ‘Pre-diagnosed’ stage, where application data had (separately) indicated that about 80% of screened individuals would be in this pre-diagnosed stage. The cascade could be defined as:

[43]:

cascade = {

'Prevalent':'all_people',

'Screened':'all_screened',

'Pre-diagnosed':'0.8*all_screened',

'Diagnosed':'all_dx',

'Treated':'all_tx',

'Controlled':'all_con'

}

Note that the ‘Pre-diagnosed’ stage is the result of a calculation and is thus not a compartment or characteristic at all. The cascade specification here is simply any output dict supported by PlotData. We can then create a CascadeEnsemble using this cascade and plot it:

[44]:

cascade_ensemble = at.CascadeEnsemble(P.framework, cascade)

cascade_ensemble.update(sampled_res)

fig = cascade_ensemble.plot_multi_cascade(years=2018)

fig.set_size_inches(8,4)

Notice that because the model outputs are converted to PlotData on a per-sample basis, uncertainty is automatically propagated to the derived quantity (i.e. ‘pre-diagnosed’ has uncertainty even though it was defined on the fly). This would be the case for any PlotData operation.
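Per-sample evaluation of a derived stage can be sketched in NumPy. The `all_screened` array below is hypothetical (1000 draws using the 214 ± 20 example from the databook section); the virtual stage is computed sample by sample, which is what carries the uncertainty through.

```python
import numpy as np

rng = np.random.default_rng(4)
# Hypothetical per-sample values of the 'all_screened' output in one year
all_screened = rng.normal(214.0, 20.0, size=1000)

# The virtual stage is evaluated for every sample, like '0.8*all_screened'
pre_diagnosed = 0.8 * all_screened

print(f"screened: {all_screened.mean():.1f} ± {all_screened.std():.1f}")
print(f"pre-diagnosed: {pre_diagnosed.mean():.1f} ± {pre_diagnosed.std():.1f}")
```

Because the formula is applied to each sample before any summary is taken, the derived stage gets its own distribution (here scaled by 0.8) rather than a single point value.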

Memory-limited environments¶

A common issue when sampling large models is that there may be insufficient RAM to store all of the Result objects in memory before adding them to an Ensemble. The PlotData stored within an Ensemble is typically orders of magnitude smaller than the raw results. First, it only contains a small subset of the model outputs: a large model might have on the order of 150 parameters, 50 compartments, and 150 links, whereas a typical Ensemble may track 5-10 quantities, which represents a ~50x reduction in size. Further, the raw output has values at every timestep, which could be on the order of 100 values (e.g. a 25 year simulation with quarterly timesteps), but it may only be necessary to compare simulations at a few time points, which could provide another ~20x reduction in size.
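The size estimates above can be checked with a few lines of arithmetic. All figures are the approximate ones from the paragraph; `kept_times = 5` is a hypothetical choice of "a few time points".

```python
# Back-of-envelope sizes from the paragraph above (all figures approximate)
n_parameters, n_compartments, n_links = 150, 50, 150
full_quantities = n_parameters + n_compartments + n_links  # 350 time series

def reduction(full, kept):
    return full / kept

for tracked in (5, 10):
    print(f"tracking {tracked} quantities: ~{reduction(full_quantities, tracked):.0f}x smaller")

timesteps = 25 * 4  # 25-year simulation with quarterly timesteps
kept_times = 5      # hypothetical: a handful of years of interest
print(f"time reduction: ~{reduction(timesteps, kept_times):.0f}x")
```

Tracking 5-10 of ~350 quantities gives a 35-70x reduction, consistent with the ~50x figure, and keeping 5 of 100 time points gives the ~20x figure; the two reductions multiply.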

In summary, the strategy for dealing with this situation is to:

1. Define a mapping function that sufficiently reduces the size of the outputs
2. Add results to the Ensemble as they are generated, rather than keeping them all in memory

To reduce the size of the mapping function's output, we have already seen examples of mapping functions that select only a subset of the outputs or populations. To reduce the number of time points, an additional call to PlotData.interpolate() can be added to retain values only at the times of interest. For example:
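The effect of keeping only the times of interest can be illustrated with `numpy.interp`. This is only an analogy: the time axis and values are hypothetical, and PlotData.interpolate may differ in its details; the sketch just shows a 100-point series being reduced to one value.

```python
import numpy as np

# Hypothetical quarterly time axis and one output time series
t = np.arange(2016.0, 2041.0, 0.25)   # 100 timesteps
values = 200.0 + 3.0 * (t - 2016.0)   # toy linear trend

# Keep only the times of interest (analogous in spirit to PlotData.interpolate(2020))
times_of_interest = np.array([2020.0])
reduced = np.interp(times_of_interest, t, values)

print(t.size, reduced.size)  # prints "100 1"
print(reduced)
```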

[45]:

store_minimal = lambda x: at.PlotData(x,outputs=['screen','diag','initiate'],pops='m_rural').interpolate(2020)

ensemble = at.Ensemble(store_minimal)

Then, to perform the sampling, we simply generate results one at a time rather than all at once:

[46]:

for _ in range(100):
    result = P.run_sampled_sims(parset,high_uncertainty_progset,default_budget,n_samples=1)
    ensemble.add(result[0])


Now, the peak memory required is only that of a single simulation, plus the reduced PlotData already stored in the Ensemble. We can then plot the ensemble as usual:

[47]:

ensemble.plot_bars();

To perform this procedure seamlessly, you can call Ensemble.run_sims instead of P.run_sampled_sims. Ensemble.run_sims runs simulations specifically for that Ensemble: instead of returning results, it adds them to the Ensemble as they are generated, without retaining the raw results.
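The streaming pattern can be sketched without Atomica. `StreamingEnsemble` and `simulate` below are hypothetical stand-ins, not the real Ensemble class: each raw result is passed through the mapping function immediately and only the reduced value is kept, so at most one raw result exists at a time.

```python
import numpy as np

class StreamingEnsemble:
    """Hypothetical minimal stand-in for the idea behind Ensemble.run_sims."""

    def __init__(self, mapping_function):
        self.mapping_function = mapping_function
        self.samples = []  # only reduced outputs are stored

    def add(self, result):
        self.samples.append(self.mapping_function(result))

    def run_sims(self, simulate, n_samples):
        for i in range(n_samples):
            # The raw result is mapped immediately and then goes out of scope
            self.add(simulate(i))

def simulate(seed):
    # Toy "raw result": a large array standing in for full model output
    rng = np.random.default_rng(seed)
    return rng.normal(size=(350, 100))

# Keep just one number per sample: quantity 0 at timestep 16
ens = StreamingEnsemble(lambda raw: float(raw[0, 16]))
ens.run_sims(simulate, n_samples=100)
print(len(ens.samples))  # prints "100"
```

The design choice is that the mapping function is the only thing that ever sees the raw result, so memory use scales with the size of the reduced output rather than with the number of samples times the full model output.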

[48]:

ensemble = at.Ensemble(store_minimal)

ensemble.run_sims(proj=P,parset=parset,progset=high_uncertainty_progset,progset_instructions=default_budget,n_samples=100)

ensemble.plot_bars();

100%|██████████| 100/100 [00:04<00:00, 21.71it/s]

Note that if you use Ensemble.run_sims, a baseline simulation without sampling will automatically be performed and stored in the Ensemble.
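What the automatic baseline amounts to can be sketched with a toy model, again in plain Python with hypothetical names (`run_model`, `BaselineEnsemble`); the real `Ensemble` may organise this differently. The baseline is a single run using the values as entered (i.e. the means), kept separate from the sampled runs. The numbers reuse the databook example of \(214 \pm 20\) screened people.

```python
import random
from typing import List, Optional


def run_model(screened: float) -> float:
    """Hypothetical one-line 'model': the output is proportional to the screened input."""
    return 0.5 * screened


class BaselineEnsemble:
    def __init__(self) -> None:
        self.baseline: Optional[float] = None
        self.samples: List[float] = []

    def run_sims(self, mean: float, sd: float, n_samples: int, seed: int = 0) -> None:
        # Baseline: one unsampled run using the mean value entered in the databook
        self.baseline = run_model(mean)
        # Sampled runs: draw the input from a normal distribution N(mean, sd) each time
        rng = random.Random(seed)
        for _ in range(n_samples):
            self.samples.append(run_model(rng.gauss(mean, sd)))


ens = BaselineEnsemble()
ens.run_sims(mean=214, sd=20, n_samples=100)
print(ens.baseline)  # 107.0
```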

Use P.run_sampled_sims if you can store all of the results in memory, which allows you to generate new Ensembles and plots without re-running the simulations.

Use Ensemble.run_sims if you don’t have enough memory to hold all of the results.
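The trade-off between the two approaches can be illustrated with a standard-library-only toy comparison. `simulate` is a hypothetical stand-in for one sampled run. `tracemalloc` measures peak Python memory for each approach, and a call counter shows that keeping every result lets you compute a new summary without re-running anything, whereas the streaming approach only retains what was extracted at run time.

```python
import random
import tracemalloc
from typing import List, Tuple

calls = 0  # counts how many (hypothetical) simulations have been run


def simulate(rng: random.Random, n: int = 10_000) -> List[float]:
    """Hypothetical stand-in for one sampled simulation producing a large result."""
    global calls
    calls += 1
    return [rng.random() for _ in range(n)]


def store_all(n_samples: int) -> Tuple[List[List[float]], int]:
    # Analogous to P.run_sampled_sims: every full result is kept in memory
    rng = random.Random(1)
    tracemalloc.start()
    results = [simulate(rng) for _ in range(n_samples)]
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return results, peak


def streaming(n_samples: int) -> Tuple[List[float], int]:
    # Analogous to Ensemble.run_sims: each result is reduced as soon as it is produced
    rng = random.Random(1)
    tracemalloc.start()
    means = []
    for _ in range(n_samples):
        r = simulate(rng)
        means.append(sum(r) / len(r))
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return means, peak


results, peak_all = store_all(50)
means, peak_stream = streaming(50)
print(peak_stream < peak_all)  # True

# With all results stored, a new summary needs no new simulations
before = calls
maxima = [max(r) for r in results]
print(calls == before)  # True
```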

Parallelization¶